The AI Anxiety Amplifier: How Clickbait Sensationalism Supercharges Human Stress in the Age of Artificial Intelligence

The age of AI isn't just changing how we think; it is triggering deeper waves of anxiety. Clickbait sensationalism is now turbocharged, and our digital and mental landscapes are shifting fast. We're only beginning to grasp the full impact, and what can protect us.

Building on the foundation we established in my previous blog post about clickbait's psychological impact, the age of artificial intelligence has created a perfect storm for human anxiety. We're not just dealing with traditional clickbait anymore. We're facing a dual threat: AI-generated content flooding our feeds, and AI itself becoming the ultimate fear-bait topic. Together they are reshaping our digital landscape and mental well-being in ways we're only beginning to understand.

The Automated Content Flood: When Machines Create Our Anxiety

The internet is drowning in AI-generated content, and this isn't hyperbole; it's measurable reality. Research estimates that at least 30% of text on active web pages now originates from AI sources[1][2][3][4][5], with the actual proportion likely approaching 40%[1]. This represents a fundamental shift in how information reaches us, creating what researchers call "AI slop": low-quality, AI-generated content designed primarily for monetization[6][7].

This automated content creation operates on an industrial scale that human content creators simply cannot match. News Corp Australia alone churns out 3,000 AI-generated "local" stories each week[8][9]. The implications go far beyond simple content volume—we're dealing with what researchers term "slopaganda," where propaganda techniques merge with generative AI to create sophisticated manipulation campaigns[8][9].

The Information Overload Crisis Intensifies

The human brain hasn't evolved to process the sheer volume of information we encounter daily. Research consistently shows that information overload leads to increased anxiety, decision fatigue, and psychological distress[10][11]. In the AI era, this cognitive burden has intensified sharply. Studies link information overload to:

- Cognitive fatigue and decreased attention span[12]

- Disrupted sleep patterns from constant digital stimulation[12][13]

- Increased anxiety and depressive symptoms[14][15]

- Difficulty distinguishing credible sources from manipulative content[10]

The psychological impact is measurable: in research on registered nurses, those experiencing information anxiety showed significantly reduced core competency, with digital health literacy serving as a crucial mediating factor[16][17].

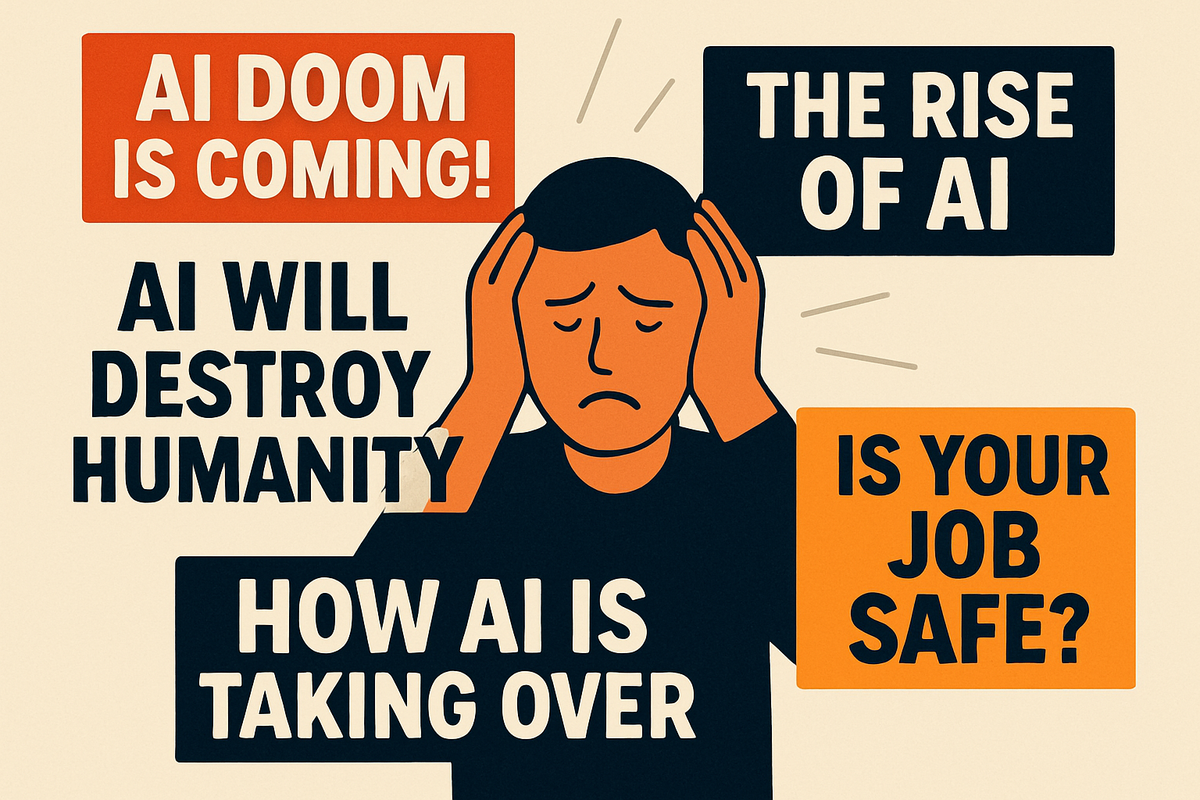

AI: The Perfect Clickbait Topic

Artificial intelligence has emerged as the ultimate clickbait subject, combining our deepest fears about technology, job security, and human relevance into irresistible content. Analysis of nearly 70,000 AI-related news headlines reveals an unreasonable level of emotional negativity and fear-inducing language.

The psychology of AI fear-mongering is particularly insidious because it exploits multiple anxiety triggers simultaneously:

- Fear of the Unknown: AI represents technology that most people don't understand, making them vulnerable to sensationalized explanations. This "fear of the other," as semiotics research describes it, triggers deep-seated anxieties about being replaced or rendered obsolete.

- Existential Threat Framing: Headlines consistently frame AI as an existential threat to humanity, despite limited scientific basis for such claims. This taps into our most primal fears about survival and human significance.

- Economic Anxiety: Fear of job displacement resonates powerfully with audiences already anxious about economic stability. This creates emotional investment in the content, driving engagement regardless of accuracy.

Five Devastating Examples of AI Clickbait and Their Anxiety Impact

- "AI Will Replace 300 Million Jobs Worldwide" - The Mass Unemployment Panic

This type of headline, based on Goldman Sachs analysis, creates immediate anxiety about economic security. Research shows such headlines trigger fight-or-flight responses, releasing stress hormones that can persist long after reading. The psychological impact includes increased job insecurity, career anxiety, and financial stress—even among workers in fields unlikely to be affected.

Anxiety Amplification Mechanism: Direct threat to livelihood and identity, creating chronic stress about future security.

- "Robots Will Overthrow Humanity by 2030" - The Existential Threat Narrative

These apocalyptic predictions exploit our deepest fears about human relevance and survival. TIME magazine's "THE END OF HUMANITY" cover exemplifies this approach. Such content triggers existential anxiety—worry about human meaning and purpose in an AI-dominated world.

Anxiety Amplification Mechanism: Attacks core human identity and significance, creating generalized anxiety about the future of humanity.

- "AI Already Controls What You See Online" - The Loss of Agency Fear

Headlines about algorithmic manipulation of information feeds tap into anxieties about personal autonomy and free will. This creates a sense of helplessness and paranoia about being manipulated without awareness.

Anxiety Amplification Mechanism: Undermines sense of personal control and autonomy, leading to hypervigilance and social media anxiety.

- "Deepfakes Will Destroy Trust in Everything" - The Reality Collapse Panic

Content about AI-generated fake videos and audio exploits fears about truth and authenticity. Research shows people dramatically overestimate their ability to detect deepfakes while simultaneously fearing their proliferation.

Anxiety Amplification Mechanism: Erodes trust in information sources and personal judgment, creating chronic uncertainty and social anxiety.

- "ChatGPT Becomes Sentient and Demands Rights" - The Consciousness Terror

Headlines suggesting AI has achieved consciousness or sentience (often misreporting technical developments) trigger fears about being replaced by superior beings. These stories exploit science fiction narratives embedded in popular culture.

Anxiety Amplification Mechanism: Triggers deep-seated fears about human obsolescence and being replaced by superior intelligence.

The Neurological Impact: How AI Anxiety Rewires Our Brains

The constant exposure to AI-related fear content creates measurable changes in brain function. Research reports that AI models themselves can show "anxiety"-like responses when exposed to traumatic narratives, which suggests that humans exposed to similar content may experience even more pronounced effects.

Studies show that exposure to anxiety-inducing AI content leads to:

- Elevated cortisol levels from chronic fight-or-flight activation[18]

- Disrupted sleep patterns from evening screen exposure to stressful content[12][13]

- Decreased cognitive flexibility as the brain focuses on perceived threats[16]

- Social withdrawal as digital anxiety spills into real-world interactions

The Business Model Behind Our AI Anxiety

The most troubling aspect of AI fear-mongering is that it's often deliberately manufactured for commercial gain. Research shows that some AI companies themselves may be exaggerating existential threats to promote regulatory frameworks that benefit established players. This creates a perverse incentive structure where public anxiety translates directly to:

- Increased engagement and advertising revenue for sensational content

- Regulatory advantages for established AI companies over open-source alternatives

- Market consolidation as fear drives users toward "trusted" platforms

- Investment flows toward companies positioned as AI safety leaders

Breaking Free from the AI Anxiety Cycle

Understanding how AI-era clickbait manipulates our psychology is the first step toward mental health protection. Key strategies include:

- Cognitive Inoculation: Recognize the specific techniques used in AI fear-mongering: existential threat framing, job replacement panic, and consciousness attribution. When you identify these patterns, you can evaluate content more rationally.

- Source Diversification: Avoid getting AI information exclusively from engagement-driven platforms. Seek out academic sources, direct technical documentation, and balanced reporting that acknowledges both benefits and risks.

- Temporal Perspective: Remember that transformative technologies typically take decades to fully deploy. Historical precedent shows that technological change, while disruptive, has consistently created new opportunities alongside displacement.

- Mindful Consumption: Implement specific boundaries around AI-related content consumption. Research shows that mindfulness practices can actually reduce AI model anxiety, suggesting even greater benefits for humans.
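For readers who like to tinker, the pattern-spotting habit described under Cognitive Inoculation can be roughed out in a few lines of Python. This is only a minimal sketch: the keyword lists and the `flag_framing` function are hypothetical illustrations made up for this post, not a validated lexicon or a method from any of the cited research, and simple keyword matching will miss plenty of cases and misfire on others.

```python
import re

# Hypothetical keyword patterns for the three framing techniques named above.
# These word lists are illustrative assumptions, not a validated lexicon.
FRAMING_PATTERNS = {
    "existential threat": r"\b(end of humanity|overthrow|extinction|apocalypse)\b",
    "job replacement panic": r"\b(replace\w*|unemployment|layoffs?)\b",
    "consciousness attribution": r"\b(sentient|conscious\w*|self-aware|demands? rights)\b",
}


def flag_framing(headline: str) -> list[str]:
    """Return the names of the framing techniques whose keywords appear in the headline."""
    text = headline.lower()
    return [
        name
        for name, pattern in FRAMING_PATTERNS.items()
        if re.search(pattern, text)
    ]


if __name__ == "__main__":
    # Headlines taken from the examples earlier in this post.
    for headline in [
        "AI Will Replace 300 Million Jobs Worldwide",
        "Robots Will Overthrow Humanity by 2030",
        "ChatGPT Becomes Sentient and Demands Rights",
    ]:
        print(headline, "->", flag_framing(headline))
```

A flag here is a prompt to slow down and check the source, not a verdict that the story is false.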

The Path Forward: Responsible AI Discourse

As AI continues evolving, we need journalism and public discourse that prioritizes accuracy over engagement, nuance over sensation. This means:

- Balanced reporting that discusses both opportunities and challenges

- Technical accuracy in describing AI capabilities and limitations

- Historical context that situates AI development within broader technological evolution

- Focus on human agency in shaping AI development and deployment

The stakes couldn't be higher. The research is clear: fear-based narratives about AI are creating measurable psychological harm while potentially hindering beneficial AI development. By understanding how AI-era clickbait exploits our psychology, we can protect our mental health while remaining appropriately informed about one of the most significant technological developments of our time.

Our anxiety about AI says more about our media consumption habits than about AI itself. By changing how we engage with AI-related content, we can reduce unnecessary stress while maintaining the critical thinking necessary to navigate our technological future wisely.

If you're struggling with anxiety related to news consumption or social media use, consider speaking with a mental health professional. The strategies outlined in this article are for general wellness and should not replace professional medical advice.

Sources:

- Delving into: the quantification of Ai-generated content on the internet

- AI Slop: The Unseen Flood of AI-Generated Content

- Slopaganda: The interaction between propaganda and generative AI

- The Impact of Online Information Overload on College Students

- Social media integration and adults' psychological distress

- Digital Technology and Mental Health: Unveiling the Psychological Impact

- How Social Media Information Overload Can Affect Mental Health

- Influence of information anxiety on core competency of registered nurses

- Fear of artificial intelligence? NLP, ML and LLMs based discovery of AI-phobia

- AI Phobia: How News Headlines Shape Public Fear of Artificial Intelligence

- How to Avoid Falling for AI Fear Mongering on Social Media

- What's Behind AI Fear-Mongering?

- UNESCO Graphic Novel "Inside AI": Issues of Semiotic Interpretation

- No, AI probably won't kill us all – and there's more to this fear campaign

- AI Expert Claims Big Tech Using Fear of AI To Scare Up Profits

- 48 Jobs AI Will Replace by 2025: Check If Yours is at Risk

- Newspaper headlines: 'AI will end work' and 'the wrath of Ciarán'

- Media has a blind spot when covering the AI panic

- A Framework for Studying the Psychology of Human–AI Interaction

- The Psychology of Deepfakes: Why We Fall For Them